TL;DR

- Driving SEO changes is challenging

- Understand how to prioritise fixing 404 errors

- SEO and Data Science are an effective blend

Many clients understand that SEO is a marathon, not a sprint. Others expect faster results.

No matter what, SEO is always the discipline with the smallest budget and fewest resources, and SEO practitioners are often a one-man band who need to prove efficacy and demonstrate ROI.

Driving change, however, can be challenging, especially when there is a risk of breaking things or when priorities conflict. More often than not, SEO practitioners need to make a business case for what is required, and this isn't only about monetary value but also about transversal value: the benefit a change brings to other projects and teams.

Aside from questioning whether search engines will care about a particular change, it is always fair to ask a couple of questions:

- How many other projects will be positively impacted by the proposed change?

- What will be the impact of leaving an issue unfixed?

Is fixing 404 errors a priority?

If some URLs on your site return a 404, this alone does not hurt your site, and search engines such as Google will not penalise you for it.

However, addressing 404s may still be valuable. Whether an error comes from a misspelt URL or from a legitimate page that no longer exists, reviewing your 404s may bring to your attention unforeseen circumstances you want to handle differently. For instance, a page that has gone but has been replaced by something better can be redirected, rather than left for search engines to drop from their index. And that is without even considering the poor experience broken links give users.

How can I determine the priority in fixing the 404s?

There is only so much time in the day, so not everything can get done (especially with a stretched dev team short on capacity). The “good” part of 404s is that you are unlikely to need the developers at all. Instead, you may need to talk to the content team, or whoever manages the CMS, which may well be you.

Irrespective of who is going to make the changes, before handing this over as a fix you may still need to understand the size of the “opportunity” by gathering numbers. These can be collected with different tools, from the basic Google Search Console, to an online crawler, to your favourite desktop app.

In my case, whether you like it or not, I have stuck with Screaming Frog for a while, and I conveniently also use it for Enterprise SEO audits.

Dan and his team have covered 404 identification extensively in the past, including how it can surface link building opportunities. They even published a video on it in December 2019.

Screaming Frog is a good tool that has evolved a lot in recent years. Yet, like any other software (or business out there), they have to prioritise their time and feature development. This sometimes (often?) comes with limitations that have to be worked around.

Two of them, IMHO, are:

- the usefulness of the exported information, which lacks a summary; and

- the ability to easily subset the data
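To make the second point concrete, here is a minimal sketch of the kind of subsetting that is tedious to repeat by hand in a spreadsheet. It assumes the export has been loaded into a pandas DataFrame with `Source`, `Destination` and `Status Code` columns (names as they commonly appear in Screaming Frog's All Inlinks export, but treat them as an assumption); `subset_inlinks` and the example.com URLs are hypothetical.

```python
import pandas as pd


def subset_inlinks(df: pd.DataFrame, status_codes=(404, 410), source_prefix=None) -> pd.DataFrame:
    """Keep only inlinks whose destination returned one of the given status codes.

    If source_prefix is given (e.g. a market folder), keep only links found on
    pages whose URL starts with that prefix.
    """
    mask = df["Status Code"].isin(status_codes)
    if source_prefix is not None:
        mask &= df["Source"].str.startswith(source_prefix)
    return df[mask]


# Hypothetical sample data standing in for a real export.
inlinks = pd.DataFrame(
    {
        "Source": [
            "https://example.com/uk/a",
            "https://example.com/uk/b",
            "https://example.com/fr/c",
        ],
        "Destination": [
            "https://example.com/uk/old",
            "https://example.com/uk/ok",
            "https://example.com/fr/gone",
        ],
        "Status Code": [404, 200, 410],
    }
)

uk_broken = subset_inlinks(inlinks, source_prefix="https://example.com/uk/")
print(uk_broken["Destination"].tolist())  # ['https://example.com/uk/old']
```

Filtering by a URL prefix is just one possible segmentation; the same boolean-mask pattern works for any other column.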

A Python script to complement the Screaming Frog 404 export

Here we are at the core of this article: my proposed solution for prioritising 404 errors.

In recent months, I have found myself increasingly involved in supporting content production and other teams to address broken links and redirects. Before going back to these teams, I need to put together specific insights that let them estimate the size of the work. In a nutshell, I found myself repeating the same actions over and over: crawling, exporting to Excel, opening the file, intersecting data and producing a small report, so that the numbers were ready to paste into an email and the final report was more actionable.

Time is precious, and since I needed the same information repeatedly for several markets, after a couple of manual reports I ended up automating things.
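Repeating the same summary for several markets is where a loop pays off. The sketch below is illustrative rather than the notebook's code: it assumes one All Inlinks DataFrame per market with `Source`, `Destination` and `Status Code` columns, and the market names, paths and `summarise_broken_links` helper are hypothetical.

```python
import pandas as pd


def summarise_broken_links(df: pd.DataFrame) -> dict:
    """Count broken destinations, the inlinks pointing to them and the pages hosting them."""
    broken = df[df["Status Code"].isin([404, 410])]
    return {
        "broken_urls": broken["Destination"].nunique(),
        "inlinks": len(broken),
        "source_pages": broken["Source"].nunique(),
    }


# Hypothetical per-market exports; in practice each DataFrame might come from
# pd.read_excel("<market>_all_inlinks.xlsx") (file names are placeholders).
markets = {
    "uk": pd.DataFrame(
        {
            "Source": ["/uk/a", "/uk/b", "/uk/b"],
            "Destination": ["/uk/old", "/uk/old", "/uk/ok"],
            "Status Code": [404, 404, 200],
        }
    ),
    "fr": pd.DataFrame(
        {
            "Source": ["/fr/a"],
            "Destination": ["/fr/gone"],
            "Status Code": [410],
        }
    ),
}

report = {market: summarise_broken_links(df) for market, df in markets.items()}
for market, numbers in report.items():
    print(
        f"{market}: {numbers['broken_urls']} broken URLs, "
        f"{numbers['inlinks']} inlinks on {numbers['source_pages']} pages"
    )
```

Each line of output is short enough to paste straight into an email to the relevant market team.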

The proposed solution is a conversation starter, just the first part of what I did; still, it is something useful that I'm confident will find favour among some of my colleagues.

What does the Python script do?

The code (conveniently shared in a Jupyter Notebook) is not complicated at all. Yet it was an interesting way to dust off my coding skills and discover newer features of the language, such as the walrus operator; a nice example of how SEO and Data Science now complement each other.
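For readers who haven't met it, the walrus operator (`:=`, an assignment expression available since Python 3.8) binds a value inside an expression. The snippet below is a hypothetical illustration of how it can keep a small reporting step compact; it is not taken from the notebook, and the URL list is made up.

```python
from collections import Counter

# Hypothetical destinations of links that returned 404/410, one entry per inlink.
broken_destinations = [
    "/old-page", "/old-page", "/old-page",
    "/typo-url",
    "/retired", "/retired",
]

counts = Counter(broken_destinations)

# Bind the total and test it in one expression, which also guards the division below.
if (total := sum(counts.values())) > 0:
    # Compute each URL's share once, filter on it and reuse it in the output.
    top = [(url, round(share, 2)) for url, n in counts.items() if (share := n / total) >= 0.3]
    print(f"{total} broken inlinks across {len(counts)} URLs")
    print(top)  # [('/old-page', 0.5), ('/retired', 0.33)]
```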

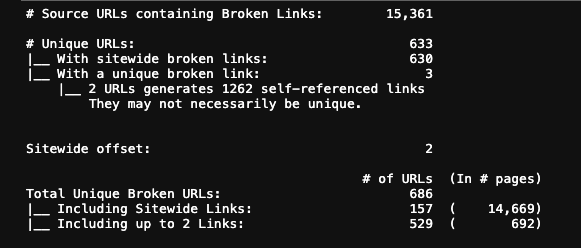

Using Pandas, a Data Science package, I load the Excel spreadsheet, filter the All Inlinks report down to 404 and 410 errors, and run a few queries to produce plain, simple text output. Nothing more, nothing less.
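The filtering step described above could look roughly like this. It is a minimal sketch, not the notebook itself: it assumes the All Inlinks export has already been read into a DataFrame (for an Excel file, `pd.read_excel` does this, with openpyxl installed) and that it has `Source`, `Destination` and `Status Code` columns; `plain_text_report` and the sample URLs are hypothetical.

```python
import pandas as pd


def plain_text_report(inlinks: pd.DataFrame) -> str:
    """Filter an All Inlinks export down to 404/410 destinations and summarise it as text."""
    broken = inlinks[inlinks["Status Code"].isin([404, 410])]

    # One row per broken destination: how many links point to it, and from how many pages.
    per_url = (
        broken.groupby(["Destination", "Status Code"])
        .agg(inlinks=("Source", "count"), pages=("Source", "nunique"))
        .reset_index()
        .sort_values(["inlinks", "Destination"], ascending=[False, True])
    )

    lines = [
        f"{broken['Destination'].nunique()} broken URLs, "
        f"{len(broken)} inlinks on {broken['Source'].nunique()} pages"
    ]
    for dest, status, n_links, n_pages in per_url.itertuples(index=False, name=None):
        lines.append(f"- {dest} [{status}]: {n_links} inlinks from {n_pages} pages")
    return "\n".join(lines)


# Hypothetical sample standing in for pd.read_excel("all_inlinks.xlsx").
sample = pd.DataFrame(
    {
        "Source": ["/a", "/b", "/c", "/a", "/d"],
        "Destination": ["/old", "/old", "/old", "/gone", "/ok"],
        "Status Code": [404, 404, 404, 410, 200],
    }
)

print(plain_text_report(sample))
# 2 broken URLs, 4 inlinks on 3 pages
# - /old [404]: 3 inlinks from 3 pages
# - /gone [410]: 1 inlinks from 1 pages
```

Sorting by inlink count puts the broken URLs with the widest reach at the top, which is usually where prioritisation starts.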

Once I had worked out what I felt was the best approach to segmenting the data (for my needs), I also became more productive at extracting information and telling the teams where to find the broken links and whether some of them could be ignored. Sizing up sitewide links has become substantially more efficient.
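One way to approach the sitewide question is to count how many distinct pages link to each broken URL. A destination linked from most pages probably sits in a template (navigation, header, footer), so a single change removes many broken links at once. The sketch below is an assumption-laden illustration: it uses the same assumed column names, treats the distinct source pages in the export as a stand-in for crawled pages, and its 0.5 threshold and `flag_sitewide` name are arbitrary and hypothetical.

```python
import pandas as pd


def flag_sitewide(inlinks: pd.DataFrame, threshold: float = 0.5) -> pd.DataFrame:
    """Classify each 404/410 destination as a 'sitewide' or 'content' link.

    share = distinct pages linking to the broken URL / distinct source pages in the export.
    """
    total_pages = inlinks["Source"].nunique()
    broken = inlinks[inlinks["Status Code"].isin([404, 410])]
    pages_per_url = broken.groupby("Destination")["Source"].nunique()
    share = pages_per_url / total_pages
    result = pd.DataFrame(
        {
            "pages": pages_per_url,
            "share": share.round(2),
            "kind": share.ge(threshold).map({True: "sitewide", False: "content"}),
        }
    )
    return result.sort_values("pages", ascending=False)


# Hypothetical sample: /old-nav is linked from 3 of the 4 source pages.
sample = pd.DataFrame(
    {
        "Source": ["/a", "/b", "/c", "/d", "/a", "/b"],
        "Destination": ["/old-nav", "/old-nav", "/old-nav", "/ok", "/typo", "/ok"],
        "Status Code": [404, 404, 404, 200, 404, 200],
    }
)

result = flag_sitewide(sample)
print(result)
```

Links flagged as sitewide are typically a single template fix, while the "content" ones are the list to hand to the content team.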

What is next?

My solution is far from a masterpiece, and I'm sure it has room for improvement. But I was happy to share the code so that people can consider it as part of their daily SEO.

Would Dan and his team consider my approach as a suggestion for a future feature? That would be fantastic, but it is not the main reason I put everything together, though I appreciate that leading by example often proves the most effective way to achieve things.